How to Whitelist Our Crawlers

Fluxguard is a vital part of enterprise security monitoring. Learn how to ensure our crawlers are whitelisted in edge protection systems.

What is a web crawler? Our friends at OxyLabs have a great discussion, but, briefly, a crawler is automated software (such as Fluxguard) that accesses web content. Sometimes, for various reasons, edge protection systems attempt to block crawlers.

Edge protection systems, Web Application Firewalls (WAFs) in particular, are powerful software and hardware “firewall” solutions. They can prevent automated “crawlers” from accessing the sites they protect. The rationale is that automated access can make sites prone to distributed denial of service (DDoS) attacks. The downside of these WAF rules is that even reputable crawlers, such as Fluxguard, can be denied access.

WAF rules to limit crawler activity take many forms. Some are basic: they block certain user agents or IP addresses from known data centers. Others are more complex and use machine learning to identify automated access, so they may begin blocking crawlers over time. As a result, the fact that your site is accessible to our crawlers today does not guarantee access in the future.

Let’s dig in.

Are you sure you need to whitelist or exempt our crawlers?

We recommend keeping the configuration footprint small. It’s better to run our software with as few custom settings as possible: this reduces errors and overall crawling “brittleness.” Whitelist only when necessary.

Comprehensive whitelisting can be tricky. Our multi-cloud crawling infrastructure is a mix of function-as-a-service and “standalone” servers. Moreover, we leverage various caching solutions to minimize third-party touch. As a result, we cannot guarantee that crawling will originate from a single IP address (or even a single range). At the same time, WAFs and other systems often have overlapping rules that can be difficult to configure.
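To illustrate why overlapping rules matter, here is a minimal sketch of a toy rule engine. It assumes a first-match-wins evaluation order, which is only one of several orderings real WAFs use. The `WebRequest` and `Rule` types, the rule names, the user agent string, and the IP range (a reserved documentation range) are all hypothetical and do not describe any particular WAF product or Fluxguard's actual addresses.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Callable, List, Tuple


@dataclass
class WebRequest:
    user_agent: str
    source_ip: str


@dataclass
class Rule:
    name: str
    action: str  # "allow" or "block"
    matches: Callable[[WebRequest], bool]


def evaluate(rules: List[Rule], request: WebRequest, default: str = "allow") -> Tuple[str, str]:
    """Toy first-match-wins evaluation: return (action, name of the deciding rule)."""
    for rule in rules:
        if rule.matches(request):
            return rule.action, rule.name
    return default, "default"


# Hypothetical values for illustration only.
DATACENTER_RANGE = ip_network("203.0.113.0/24")  # reserved documentation range
CUSTOM_UA = "acme-compliance-monitor"

block_datacenters = Rule(
    "block-datacenter-ips", "block",
    lambda r: ip_address(r.source_ip) in DATACENTER_RANGE,
)
allow_custom_ua = Rule(
    "allow-custom-ua", "allow",
    lambda r: r.user_agent == CUSTOM_UA,
)

crawler = WebRequest(user_agent=CUSTOM_UA, source_ip="203.0.113.7")

# The IP rule is checked first, so the user agent exemption never applies.
print(evaluate([block_datacenters, allow_custom_ua], crawler))
# The exemption is checked first, so the crawler gets through.
print(evaluate([allow_custom_ua, block_datacenters], crawler))
```

In this model the same exemption either works or silently fails depending on where it sits relative to other rules, which is why it is worth reviewing the full rule set rather than a single entry.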

By and large, whitelisting is necessary if edge protection systems systematically block Fluxguard. Blocks often surface as “forbidden” (403) or other 4xx and 5xx error messages. Depending on your edge protection system, they can also be hard to detect: you might find that the crawl never concludes, for example.
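As a rough sketch of how these symptoms could be tallied from your own crawl logs, the helper below treats any 4xx or 5xx status, or a request that never completed, as consistent with a block. The function names and the `(status, timed_out)` record shape are assumptions made for illustration and are not part of Fluxguard. A single 404 is usually just a missing page, so what matters is a consistent pattern across many pages.

```python
from typing import Iterable, Optional, Tuple


def looks_blocked(status: Optional[int], timed_out: bool = False) -> bool:
    """Return True when a fetch outcome is consistent with an edge block.

    A missing status or a timeout stands in for a crawl that never concludes.
    """
    if timed_out or status is None:
        return True
    return 400 <= status <= 599


def blocked_fraction(outcomes: Iterable[Tuple[Optional[int], bool]]) -> float:
    """Fraction of (status, timed_out) outcomes that look blocked."""
    results = [looks_blocked(status, timed_out) for status, timed_out in outcomes]
    return sum(results) / len(results) if results else 0.0


# Hypothetical sample: one success, one forbidden, one hang, one server error.
sample = [(200, False), (403, False), (None, True), (503, False)]
print(blocked_fraction(sample))
```

A fraction close to 1.0 across a whole site suggests a systematic block, while a handful of isolated errors usually does not.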

User-Agent Whitelisting

We recommend this method because it ensures that a specific user agent is used across our entire crawling stack. While we can monitor from certain IP addresses, this is not recommended, as it limits functionality that relies on our distributed multi-cloud crawling stack.

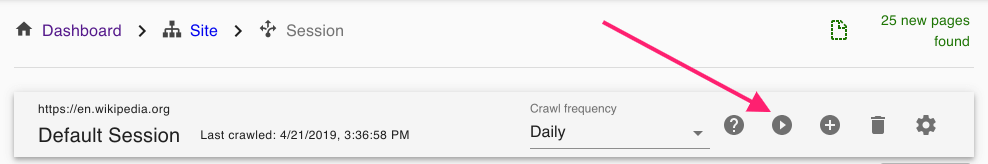

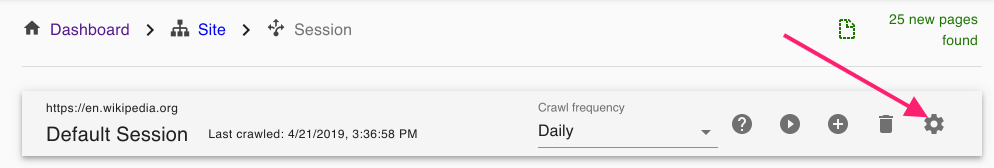

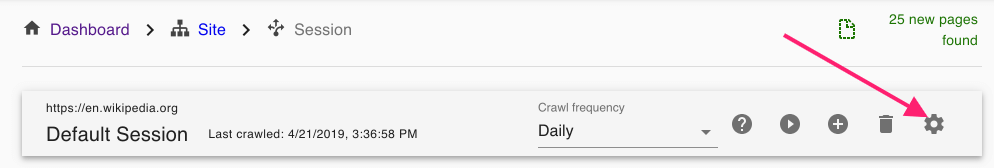

1. Click into Session Settings

Go to the Crawl tab.

2. Add a custom User Agent

Enter any value you like here. We recommend using something related to your use case. This makes it unlikely that anyone other than Fluxguard will use this user agent to bypass your WAF.

3. Add the User Agent in the WAF exemption area

This step is specific to your WAF system. Add the custom user agent to the whitelist rules in the WAF settings area.

Take this opportunity to confirm that the exemption fully whitelists our crawlers. Some WAFs apply several overlapping rules, for instance. Make sure that the user agent bypass alone is sufficient for a comprehensive exemption of our crawlers.

4. Start a new crawl to confirm everything works as intended
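Before starting the crawl, you may want a quick check of the user agent rule on its own. The sketch below uses only Python's standard library. The helper names, the `acme-monitor` label, and the idea of appending a random suffix to make the string harder to guess are assumptions added for illustration, not Fluxguard requirements. A probe from your own machine does not come from our crawling infrastructure, so it cannot replace the crawl in step 4.

```python
import secrets
import urllib.error
import urllib.request


def make_user_agent(label: str) -> str:
    """Combine a use-case label with a random suffix (an assumed convention)."""
    return f"{label}/{secrets.token_hex(8)}"


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Create a GET request that carries the custom User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def probe(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Return the HTTP status the site gives this user agent.

    Timeouts and connection failures raise urllib.error.URLError or
    TimeoutError, which are left for the caller to handle.
    """
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib reports 4xx and 5xx responses as exceptions.
        return err.code


# Generate the value once, then paste the same string into both the
# Fluxguard session settings and the WAF exemption rule.
custom_ua = make_user_agent("acme-monitor")
print(custom_ua)
```

Calling `probe("https://your-site.example/", custom_ua)` before and after adding the exemption, and comparing it with a probe that uses a different user agent, gives a rough indication of whether the rule is taking effect. The URL here is a placeholder.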

Enhanced Proxies

If you are not able to whitelist our crawlers, consider using the more advanced proxy networks available in Fluxguard. These proxy networks are not foolproof, but they can often access difficult-to-obtain content.

1. Click into Session Settings

Go to the Crawl tab.

2. Adjust Proxies

You will notice several options to add and adjust proxy networks. For difficult-to-obtain content, we recommend our Enhanced and Super-enhanced proxies. These cost additional credits, as noted next to each option.